“In our rapidly evolving digital age, artificial intelligence (AI) has emerged as a groundbreaking technological frontier, transforming the way we interact with and understand the world around us. Among the remarkable advancements in AI, ChatGPT stands as a remarkable example of natural language processing capabilities. Developed by OpenAI, ChatGPT is an AI model that excels at understanding and generating human-like text, making it a powerful tool for communication, information retrieval, and problem-solving. Its potential applications span a wide range of fields, from customer support and content generation to education and healthcare. This essay delves into the multifaceted realm of ChatGPT, exploring its underlying technology, practical use cases, ethical considerations, and the broader implications it has for our society. By examining ChatGPT’s capabilities and limitations, we can gain a deeper understanding of the transformative impact it has on our daily lives and the myriad questions it raises about the future of AI and human-machine interactions” (OpenAI’s ChatGPT, written and edited by ChatGPT Model 3.5, Nov. 21, 2023).

The above was written by ChatGPT, an artificial intelligence model released by OpenAI in November 2022. The writing generated by ChatGPT appears human-like, and there have been three Community Honor Board cases regarding its use at Branson this past year, according to Director of Studies Jeff Symmonds. This leads us to wonder what the role of AI should be in the classroom, and whether it should have a place in education at all.

To reach a common understanding, we believe it is essential to comprehend the purpose of education. School is designed to teach us various skills, ranging from arithmetic to calculus and from reading comprehension to analytical writing, in the hope that we will apply them in the real world. However, the truth is that few of us will use specific technical skills, like taking derivatives in calculus or writing literary analysis essays, in our careers. This raises the question: is there a purpose to learning something we may never actually use?

While this understanding of education is true and this concern valid, we interpret the crux of education to be teaching students how to learn; in other words, learning to learn. Yes, the knowledge we gain can be used for good, but the skills people use in their day-to-day lives are rarely rooted in the actual “knowledge” taught to us. It’s the critical thinking and problem-solving involved in understanding this material that matter most. Branson’s main purpose is to teach us how to learn, in keeping with our mission statement’s goal “to develop students who make a positive impact in the world.” We, as students, should strive to adapt and engage in the challenge of coming up with novel, thought-provoking ideas. So, does AI help teach us how to learn? Our position: no, not really.

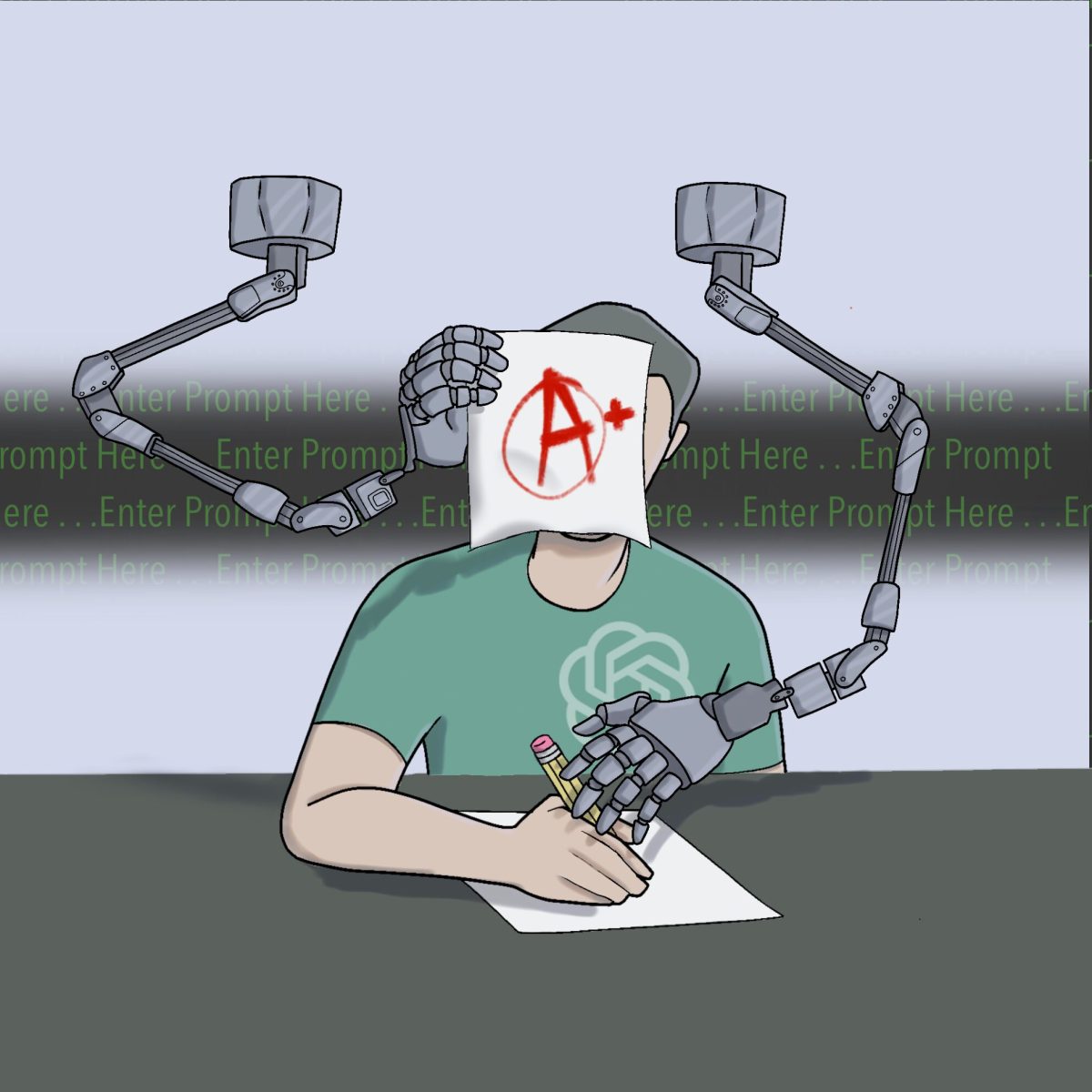

Rather than teaching us how to learn and giving us the tools to be critical thinkers, AI, much like calculators and computers, offers a shortcut in information processing and problem-solving. However, AI’s capabilities extend far beyond the simple calculations or basic functions, like word processing, that those tools offer. It can generate detailed responses and essays based on minimal input, such as a prompt as simple as “write me an essay on X.” This convenience poses a risk: students might rely too heavily on AI, treating it as an “all-knowing,” infallible source of information, and therefore miss out on developing critical thinking and research skills.

It’s also worth mentioning that Branson, as a private institution, is very different from many schools across the country. We have teachers who are willing to meet with us outside of class time and who are engaged with and enthusiastic about the content they are teaching. This leads to an elevated emphasis on critical thinking, and because that emphasis is paired with good teaching, we don’t need to look things up on ChatGPT for an “answer.” In fact, for many classes, the way you get to the answer is far more important than the actual answer. For example, writing is an exercise in critical analysis. There is no point in using ChatGPT, which will think for us without teaching us this extraordinarily valuable skill.

Furthermore, these chatbots carry bias, even political bias. They are trained on an internet dataset that includes plenty of unreliable sources with inadequate citations or none at all. With odd biases and opinions flowing through, we’re easily susceptible to these misinformation traps. Take, for example, a study on the safety of the Ford F-150. You’re not necessarily going to want to wholeheartedly believe the results of tests performed by Ford. Or consider a study on the ergonomic benefits of lawn chairs done by a lawn chair company: the study isn’t necessarily “bad,” but all the results should be taken with a grain of salt. We worry that AI’s cumulative and unknown bias could lead students to do further research into factually incorrect results to reinforce a point they’re trying to make.

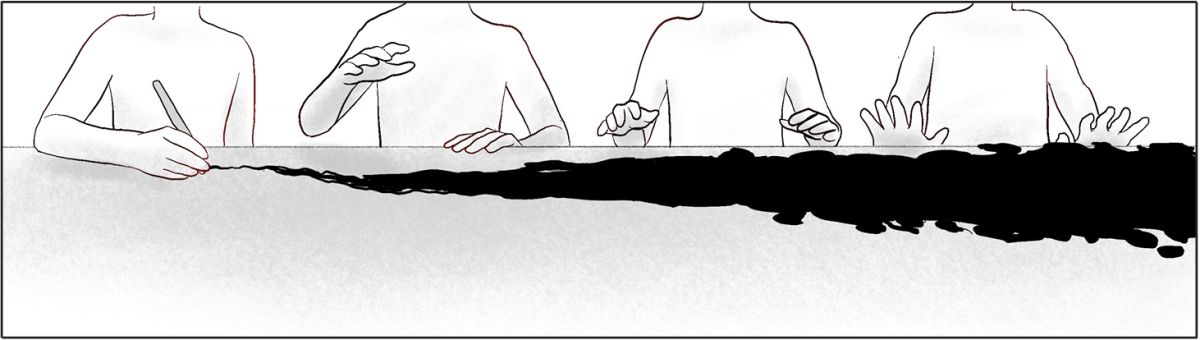

If you have read this far in this editorial, you might be saying to yourself, “These editors are confused. What are they even trying to say?” And you would be correct. With the complete lack of precedent (legal and ethical) available on chatbots, we find ourselves not fully understanding AI’s benefits and implications. Editorials are based on the opinion of the editors at large, and it is difficult to establish a narrative given the lack of information and studies; we are pretty much in the dark. That darkness is compounded by a large gray area around what is OK and not OK. Nevertheless, we would like to finish with a final thought. As Elbert Hubbard said in 1911,

“One machine can do the work of fifty ordinary men. No machine can do the work of one extraordinary man.”

Who are you going to be, and how will you use AI?